2 Evaluation in Simulation-Based Education

Simulation-based education has emerged as a pivotal strategy in healthcare training, providing an innovative platform for learners to develop critical clinical skills, improve teamwork, and enhance decision-making abilities in a risk-free environment. As healthcare systems increasingly face complex challenges, including the need for improved patient safety and quality of care, the evaluation of simulation programs has gained importance in ensuring their effectiveness and sustainability.

Evaluating simulations in healthcare involves assessing both the educational outcomes for participants and the impact on patient care. Various methodologies, including formative and summative assessments, can be employed to measure knowledge acquisition, skill proficiency, and behavioral changes. Additionally, evaluations often consider participant satisfaction, retention of skills over time, and the transfer of learned competencies to real-world clinical settings.

Research has shown that well-structured simulation experiences can lead to significant improvements in clinical performance and confidence among healthcare professionals. However, to optimize these educational interventions, it is essential to systematically evaluate their design, implementation, and outcomes. This includes examining factors such as fidelity of the simulation, alignment with curriculum objectives, and integration with other educational modalities.

As the healthcare landscape continues to evolve, ongoing evaluations of simulation-based training are crucial for ensuring that these programs remain relevant, effective, and aligned with the competencies required in modern healthcare practice. By fostering a culture of continuous improvement, evaluations contribute not only to the enhancement of educational practices but also to the overall goal of delivering safer and more effective patient care.

Learning Objectives:

- Utilize theories and standards of practice in simulation education as frameworks for evaluation of simulation-based education.

- Examine evaluation strategies and instruments based on the intended purpose of evaluation.

- Describe tools used in evaluations of simulation-based education in healthcare professions.

- Analyze the current state of evaluations in simulation-based education in healthcare professions.

I. Models/Frameworks for Evaluation of SBE:

Nurse educators' efforts toward evaluation in education need to be based on theories or educational frameworks. The following theories and frameworks are used in designing evaluation processes for simulation-based education (SBE):

- NLN/Jeffries Simulation Theory describes what needs to be considered in preparing and delivering simulations, how to develop and deliver the simulation, and what to consider for outcomes. It elucidates the roles of the people involved in any simulation experience and points to areas in which outcomes can be measured (Jeffries, 2022). The major components of the theory are supported by extensive literature and empirical evidence published after its introduction (Adamson, 2015), and a further systematic review of the literature recommends examining elements that need to be enhanced, such as curriculum integration and evaluation of outcomes (Jeffries, 2022).

NLN Jeffries Simulation Theory

Examples of evaluation studies using the NLN Jeffries Simulation Theory

- Bowden, A., Traynor, V., Chang, H. R., & Wilson, V. (2022). Beyond the technology: Applying the NLN Jeffries Simulation Theory in the context of aging simulation. Nursing forum, 57(3), 473–479. https://doi.org/10.1111/nuf.12687

Thinking beyond

How will you plan the evaluation of your simulation education program using the NLN/Jeffries Simulation Theory? What are aspects of the simulation-based education that you will need to evaluate?

As a model for evaluation, the NLN Jeffries Simulation Theory attends to the following (Prion and Haerling, 2020):

- context (place and purpose) and background (goals of the simulation, curricular fit, and resources),

- design (learning objectives, fidelity, facilitator responses, participant and observer roles, activity progression, prebriefing, and debriefing),

- simulation experiences (experiential, interactive, collaborative, learner-centered),

- facilitator and educational strategies,

- participant attributes and outcomes (reaction, learning, and behavior).

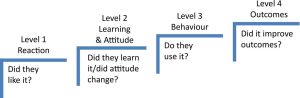

2. Kirkpatrick’s Levels of Evaluation

Kirkpatrick’s Model has been used as a frame for designing different levels of evaluation in simulation education. It is a useful conceptual model for summarizing and analyzing efforts to measure the impact of simulation learning experiences. The model describes four sequential stages of assessment and evaluation efforts: Level 1: Reactions; Level 2: Learning; Level 3: Behaviors; and Level 4: Results (Prion and Haerling, 2020).

Watch this one-minute video:

Reflection Questions:

- Are evaluations done in your simulation program? If yes, at what level according to Kirkpatrick’s Model?

- What types of evaluations do we see mostly in current simulation education literature?

Prion and Haerling (2020) summarized the empirical evidence on evaluation of simulation outcomes in this book chapter: Evaluation of Simulation Outcomes

Key Points:

- Early nursing research about simulation learning experiences focused on Level 1: Reactions. These studies focused on learner and instructor satisfaction, and perceived confidence and competence resulting from the simulation experience.

- The next wave of SBE evaluation research focused on Level 2: Learning, in which researchers attempted to describe the type and amount of learning that resulted from one or more simulation experiences. Example: Almeir et al. (2006), “the effect of scenario-based simulation training on nursing students’ clinical skills and competence.”

- Current literature abounds with Level 3: Behavior studies, in which the evidence demonstrates that learners can apply and transfer skills and knowledge from simulation. Example: “impact of simulation-enhanced patient fall instruction module on beginning nursing students.”

- Demonstrating Level 4: Results and Outcomes as an effect of simulation experience is challenging: an ideal study would need to show that simulation-based experiences result in improved patient outcomes in the patient care setting.

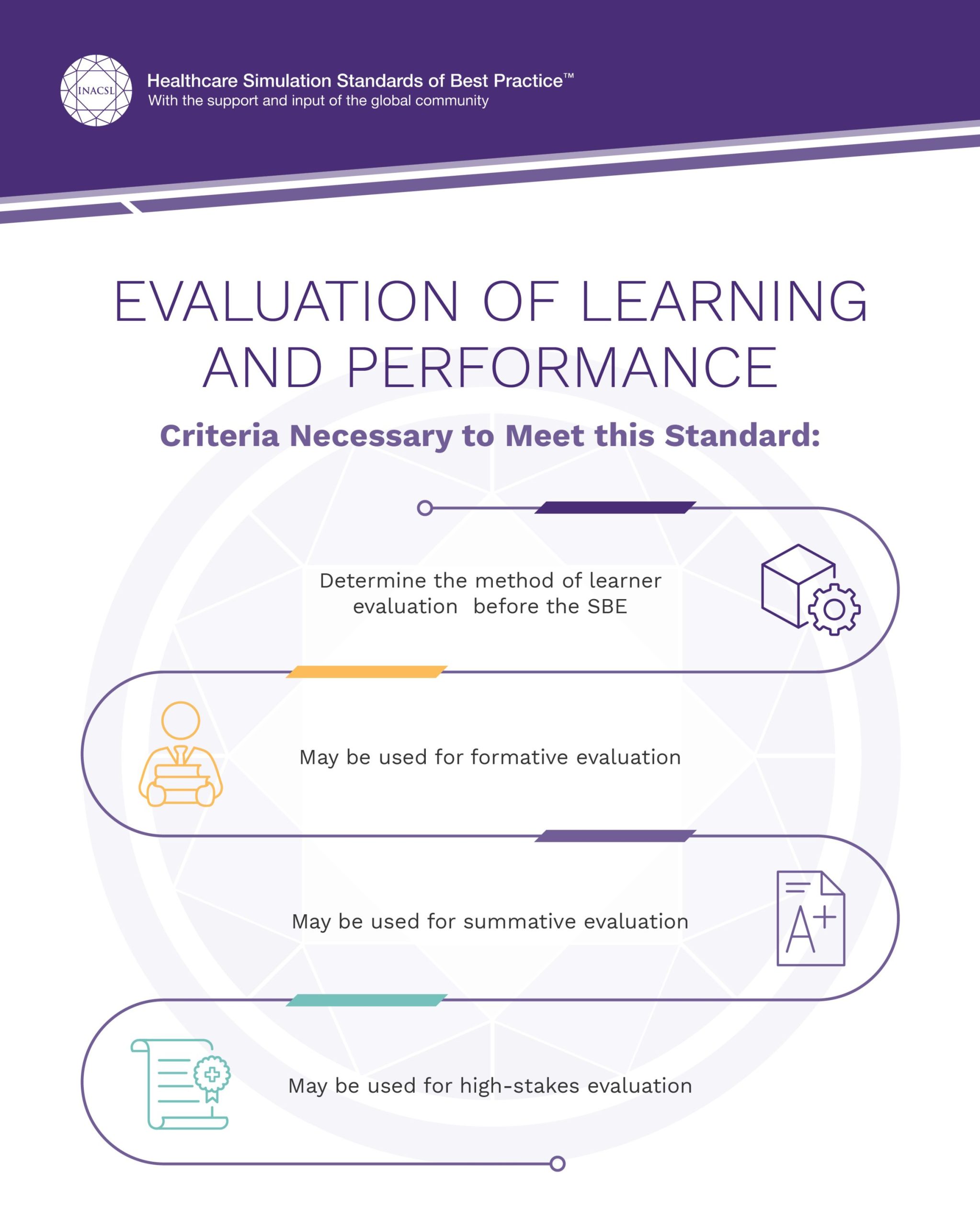

3. INACSL Healthcare Simulation Standards of Best Practice

The INACSL Healthcare Simulation Standards of Best Practice present several key points on evaluation, which are summarized below:

(Read this document for complete details: Healthcare Simulation Standards of Best Practice™ Evaluation of Learning and Performance)

a. Defining terms used in evaluation of learning and performance in simulation:

- Formative evaluation of the learner is meant to foster development and assist in progression toward achieving objectives or outcomes.

- Summative evaluation focuses on the measurement of outcomes or achievement of objectives at a discrete moment in time, for example, at the end of a program of study.

- High-stakes evaluation refers to an assessment that has major implications or consequences based on the result or the outcome, such as merit pay, progression, or grades.

- Note: Research has identified learning benefits to the observer as a learner in the simulation experience. If the learner is in an observer role in the SBE, the facilitator may consider evaluating the observer.

b. Elements of Learner Evaluation in SBE (Palaganas et al., 2020):

In planning evaluation of simulation-based education, several elements need to be considered. These elements concretize the components of the NLN Jeffries Simulation Theory.

- Type of evaluation (Kirkpatrick’s level; formative versus summative)

- Purposes of evaluation

- Timing of the evaluation (incorporated in simulation design)

- Use of valid and reliable evaluation tools

- Training of evaluators

- Completion of the evaluation, interpretation of the results, and provision of feedback to the learners

The purposes of evaluation in simulation-based education (Palaganas et al., 2020) include, but are not limited to:

- Helping participants learn and identify what they have learned (or not learned).

- Identifying actual or potential problems, including shortfalls in participant learning or gaps in a specific simulation activity or program.

- Assigning participant scores.

- Improving current practices.

- Identifying how well or efficiently intended outcomes were achieved.

Examining our programs: In your simulation program,

- Is there an evaluation plan for learning and performance?

- How do we collect data for evaluation? When is data collected? What instruments are used? Do we know the psychometric properties of the instruments we use?

- What are the purposes of SBE evaluation?

- Are evaluators of simulation trained? How so?

- How do we use the data that are collected? How are the data managed and analyzed? Do we communicate results to students and faculty? Do SBE evaluation data impact the curriculum and other aspects of nursing program implementation?
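One way to check whether evaluator training is working is to have two trained evaluators score the same recorded simulation and compare their ratings. The sketch below computes Cohen's kappa, a common agreement statistic that corrects for chance agreement. It is an illustration only: the source does not prescribe this statistic, and the function name `cohen_kappa` and the ratings (1 = competency demonstrated, 0 = not demonstrated) are hypothetical.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same items with categorical labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)

    # Proportion of items on which the two raters gave the same score.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Agreement expected by chance, from each rater's own category frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1:
        raise ValueError("kappa is undefined when both raters use a single category")
    return (observed - expected) / (1 - expected)


# Hypothetical item-level ratings from two evaluators for one learner.
evaluator_1 = [1, 1, 0, 1, 0, 1, 1, 0]
evaluator_2 = [1, 1, 0, 1, 1, 1, 0, 0]
print(round(cohen_kappa(evaluator_1, evaluator_2), 2))
```

A value near 1 suggests the evaluators apply the instrument consistently, while a value near 0 suggests agreement no better than chance and may point to a need for further evaluator training.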

II. Evaluation Instruments in SBE

Palaganas et al. (2020) list several instruments used in simulation-based education according to Kirkpatrick’s levels:

- Level I: Reaction. Affective: Satisfaction and Self-Confidence in Learning Scale (National League for Nursing, 2005); Emergency Response Confidence Tool (Arnold et al., 2009).

- Level II: Learning. Cognitive: multiple-choice exam questions, such as those from the Assessment Technologies Institute (ATI). Psychomotor: skills checklists (Perry, Potter, & Elkin, 2012). Multiple domains: Lasater Clinical Judgment Rubric (Lasater, 2007); Sweeny-Clark Simulation Evaluation Rubric (Clark, 2006); Clinical Simulation Evaluation Tool (Radhakrishnan, Roche, & Cunningham, 2007); DARE2 Patient Safety Rubric (Walshe, O’Brien, Hartigan, Murphy, & Graham, 2014).

- Level III: Behavior. Creighton Simulation Evaluation Instrument (Todd, Manz, Hawkins, Parsons, & Hercinger, 2008); the latest version is the Creighton Competency Evaluation Instrument.

Examples of review articles and instrument websites that may be helpful for identifying possible evaluation instruments include:

• “An Updated Review of Published Simulation Evaluation Instruments,” by Adamson, Kardong-Edgren, and Willhaus, in Clinical Simulation in Nursing (2013)

• “Assessment of Human Patient Simulation-Based Learning,” by Bray, Schwartz, Odegard, Hammer, and Seybert, in American Journal of Pharmaceutical Education (2011)

• The Creighton Competency Evaluation Instrument website at CCEI

• The Debriefing Assessment for Simulation in Healthcare (DASH) website at DASH

• “Human Patient Simulation Rubrics for Nursing Education: Measuring the Essentials of Baccalaureate Education for Professional Nursing Practice,” by Davis and Kimble, in Journal of Nursing Education (2011)

• INACSL Repository of Instruments Used in Simulation Research website at INACSL repository of instruments

• “A Review of Currently Published Evaluation Instruments for Human Patient Simulation,” by Kardong-Edgren, Adamson, and Fitzgerald, in Clinical Simulation in Nursing (2010)

• “Tools for Direct Observation and Assessment of Clinical Skills,” by Kogan, Holmboe, and Hauer, in Journal of the American Medical Association (2009)

• Quality and Safety Education for Nurses (QSEN) Simulation Evaluation website at QSEN Simulation Evaluation website

• “The Contribution of High-Fidelity Simulation to Nursing Students’ Confidence and Competence,” by Yuan, Williams, and Fang, in International Nursing Review (2012)

III. Reliability, Validity and Other Considerations

Revisiting the concepts of reliability and validity:

…and more

Reflection Questions:

As simulation educators, what can we do to ensure the validity and reliability of our simulation evaluation tools?

- What are the psychometric properties of an instrument that will provide us views of its validity and reliability?

- Examine this tool: CCEI “Content validity ranged from 3.78 to 3.89 on a four-point Likert-like scale. Cronbach’s alpha was > .90 when used to score three different levels of simulation performance.”

- Will you consider this tool in assessing your learners’ performance in simulations?

- How does it compare to similar tools?
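The Cronbach's alpha reported in the excerpt above is a measure of internal consistency: how closely the items of an instrument move together across learners. As a rough sketch, it can be computed from item-level scores as alpha = k/(k-1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The function name `cronbach_alpha` and the scores below are hypothetical and are not taken from the C-CEI studies.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a score table: one row per learner, one column per item."""
    if len(scores) < 2:
        raise ValueError("need scores from at least two learners")
    n_items = len(scores[0])
    if n_items < 2:
        raise ValueError("need at least two items")
    if any(len(row) != n_items for row in scores):
        raise ValueError("every learner must have a score for every item")

    # Sample variance of each item across learners.
    item_variances = [variance([row[i] for row in scores]) for i in range(n_items)]

    # Sample variance of each learner's total score.
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("alpha is undefined when all total scores are identical")

    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings: five learners scored on four items of a rubric (1 to 4 scale).
ratings = [
    [4, 3, 4, 4],
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [1, 2, 1, 2],
    [4, 4, 3, 4],
]
print(round(cronbach_alpha(ratings), 2))
```

Alpha describes only internal consistency. A high value does not by itself establish validity or agreement between evaluators, so it is best read alongside the other psychometric properties reported for a tool.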

Knowledge Check:

This article explains the concepts of reliability and validity of evaluation tools in detail: Validating Assessment Tools in Simulation

Evaluation Activities: Using the Creighton Competency Evaluation Instrument (C-CEI)

Note the activities below and open this website: C-CEI

- Download the C-CEI and the accompanying discussion worksheet

- Watch the training video

- Complete the C-CEI worksheet to assess performance of learners in this planned simulation activity: Simulation Design: Medical Error

- Review the simulation objectives, look at the scenario progression, and determine expected behaviors in a) formative evaluation and b) summative evaluation

Additional/Optional Resources:

- Evaluation Strategies of SBE in a computer-based environment.

References:

Adamson, K. A., & Kardong-Edgren, S. (2012). A method and resources for assessing the reliability of simulation evaluation instruments. Nursing Education Perspectives, 33(5), 334–339. https://doi.org/10.5480/1536-5026-33.5.334

Adamson, K. (2015). A systematic review of the literature related to the NLN/Jeffries Simulation Framework. Nursing Education Perspectives, 36(5), 281–291. https://doi.org/10.5480/15-1655

McMahon, E., et al. (2021). Healthcare Simulation Standards of Best Practice: Evaluation of Learning and Performance. Clinical Simulation in Nursing, 58, 54–56.

Jeffries, P. (2021). The NLN Jeffries Simulation Theory (2nd ed.). Wolters Kluwer Health.

Palaganas, J. C., Ulrich, B. T., & Mancini, M. E. (2020). Mastering Simulation (2nd ed.). Sigma.

Park, C., & Holtschneider, M. (2019). Evaluating simulation education. Journal for Nurses in Professional Development, 35(6), 357–359. https://doi.org/10.1097/NND.0000000000000593

Prion, S., & Haerling, K. A. (2020). Evaluation of simulation outcomes. Annual Review of Nursing Research, 39(1), 149–180. https://doi.org/10.1891/0739-6686.39.149